Back on topic – Ct vs. CUDA

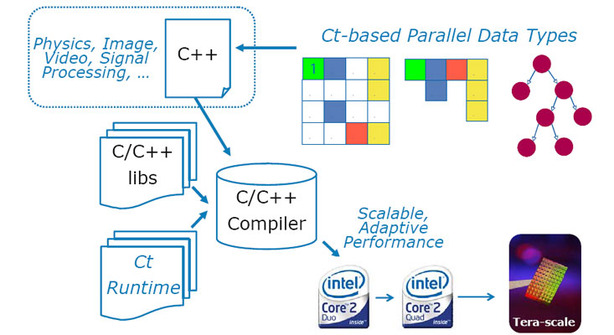

In an attempt to get back on topic, I turned my attention to a subject that Intel spent quite a bit of time talking about during the recent Intel Developer Forum in Shanghai, China. The one thing, aside from Larrabee of course, that really interested me was the discussion about Intel’s threaded programming model known as Ct. I asked David what he thought about Ct and whether he felt there were similarities between it and CUDA. “I think it’s interesting, but I’m not really sure what the benefit of it is at the moment,” pondered Kirk. “It looks to be much the same story as CUDA in that it achieves roughly the same thing – what I’m trying to understand is the difference between the two. There really is an infrastructure where that works, and it’s quite a bit more effort to write Ct code.

“C++ is a great buzzword but many, many programmers don’t write C++... and for that matter, many programmers don’t even write C,” Kirk explained. “C is really a pretty simple way of expressing things. We could build something like Ct on top of CUDA and it would make it more complex, but I’m not sure it would make it better at the same time – I think that’s the challenge.

“I think there’s room for a lot of attempts with Ct – but at the moment I don’t think it’s a mass market accessible approach. The whole template library approach [to programming] is very popular as an exercise but it’s not popular in companies.”

I suggested to David that he was perhaps being a little too general, tarring everyone with the same stereotypical brush. I asked him to clarify this a bit more. “Of course, it’s impossible to deny that it’s popular in some companies, but it doesn’t work in big companies. Of all the people we talk to, it doesn’t work for very many of them,” he said.

Why is that the case though? “It’s more baggage and more complexity – if it was ten times simpler, you’d have a thousand times more people using it,” he explained. “Oh and, by the way, we will support C++ in CUDA in the future – it’s just a matter of a little more tool development. The reason we’re adding support is because a few people are asking for it, but actually most people are not.”

We got sidetracked for the next five minutes or so, as our food finally arrived. Once David had taken a few mouthfuls, it was time to press him to summarise his feelings on Ct, as there were more topics to cover before he had to go back to Imperial College for more meetings with researchers. “Oh yes,” he said. “Overall, I think that Intel is grasping for a way to make parallel programming accessible for people on CPUs and Ct is another attempt at that. If you look at Intel’s research website, there are nearly 30 things like this that they’re working on.

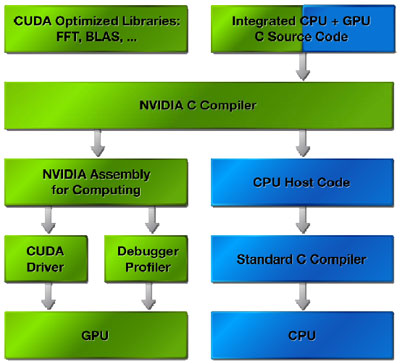

“We made a choice and we put a lot of effort and a lot of support behind CUDA and we’re confident that this is a good choice for a lot of people. You mentioned a couple of problems, like the ability to execute code on the CPU, and we try to go and solve problems that actual users would have.

“Like for example, several people have said that CUDA needs to run on the CPU as well – we can do that and we’re adding it. It also means that all the things that people ask for are being added bit by bit. I’m not sure that’s what it’s going to be like with additional feature requests in Ct and whether Intel is going to treat it as a completed product [from the outset] that it’s going to support for the next ten years. We’ve made that commitment with CUDA and we’re going to support it across all of our future products.”
